AI challenges how technology is designed

GN News

Nov 13, 2019

The human brain is cleverer than any machine, which is why we’re mimicking it with Artificial Intelligence (AI) in our devices. But AI developments can only go so far with the approach to technology that we have now.

Wouldn’t it be amazing if machines could think like humans do? Scientists have thought so since the 1940s, but it’s only in recent years that we’ve had the technology to make forms of artificial intelligence, such as machine learning, a reality.

Today, AI is at work in virtual assistants like Apple’s Siri and Amazon’s Alexa; in estimating traffic delays and suggesting routes on Google Maps; in facial recognition when tagging a friend in a photo on Facebook; and in recommending music on Spotify.

It’s at work in headsets and hearing aids, too, as innovators like GN strive to replicate and even exceed how we hear naturally as humans (see “How GN’s AI-powered audio knows what you want to hear – and what you don’t”).

Why the brain is so hard to copy

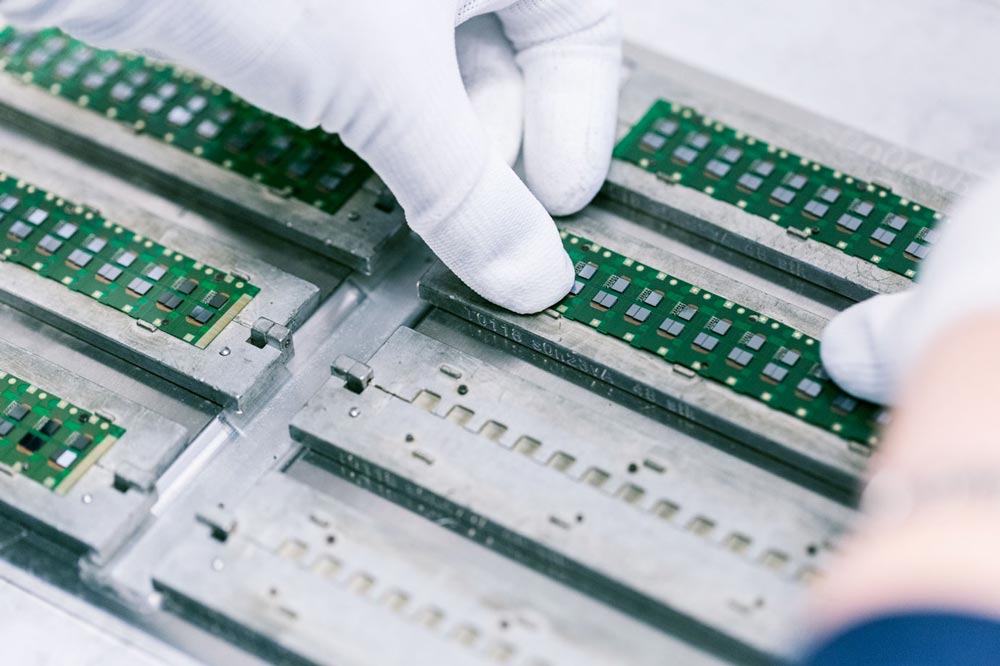

One of the challenges of designing hearing aids that aim to mimic human hearing is that the “brain” (the microchip where all the “thinking”, or calculation, happens) is nowhere near the size of a human brain. In a phone or a car, for example, there is room for a much larger chip, but in a tiny hearing aid that sits in or behind a human ear, there is limited room to move – literally. The brain of a hearing aid is smaller than a fingernail, and the batteries that power it are just as compact.

A hearing aid chip is smaller than a fingernail

From evolution to revolution in how we do tech

Each time we design a new hearing aid, a dedicated team of chip designers stretches the previous limits to squeeze more power and efficiency out of the chip, meaning the hearing aid can do more and last longer. One example is the chip technology in GN’s premium rechargeable hearing aid, ReSound LiNX Quattro.

The problem, as Brian Dam Pedersen, Chief Technology Officer at GN Hearing, explains, is that the hardware we use today, such as the chips, has not been built to cope with the heavy mental load of AI.

“The basic algorithms used in hearing aids today were developed 15-20 years ago, and the industry has been refining them over time. Hardware development so far has been an evolution, but the transition to machine learning is a revolution in the way we think of chips and their architecture. This is where we see the greatest opportunities for achievements,” Brian says.

The challenge of making machines think like humans

How does AI work differently to other technology, and why does it put such a heavy load on the hardware? It all comes down to how that thinking, or processing, is structured.

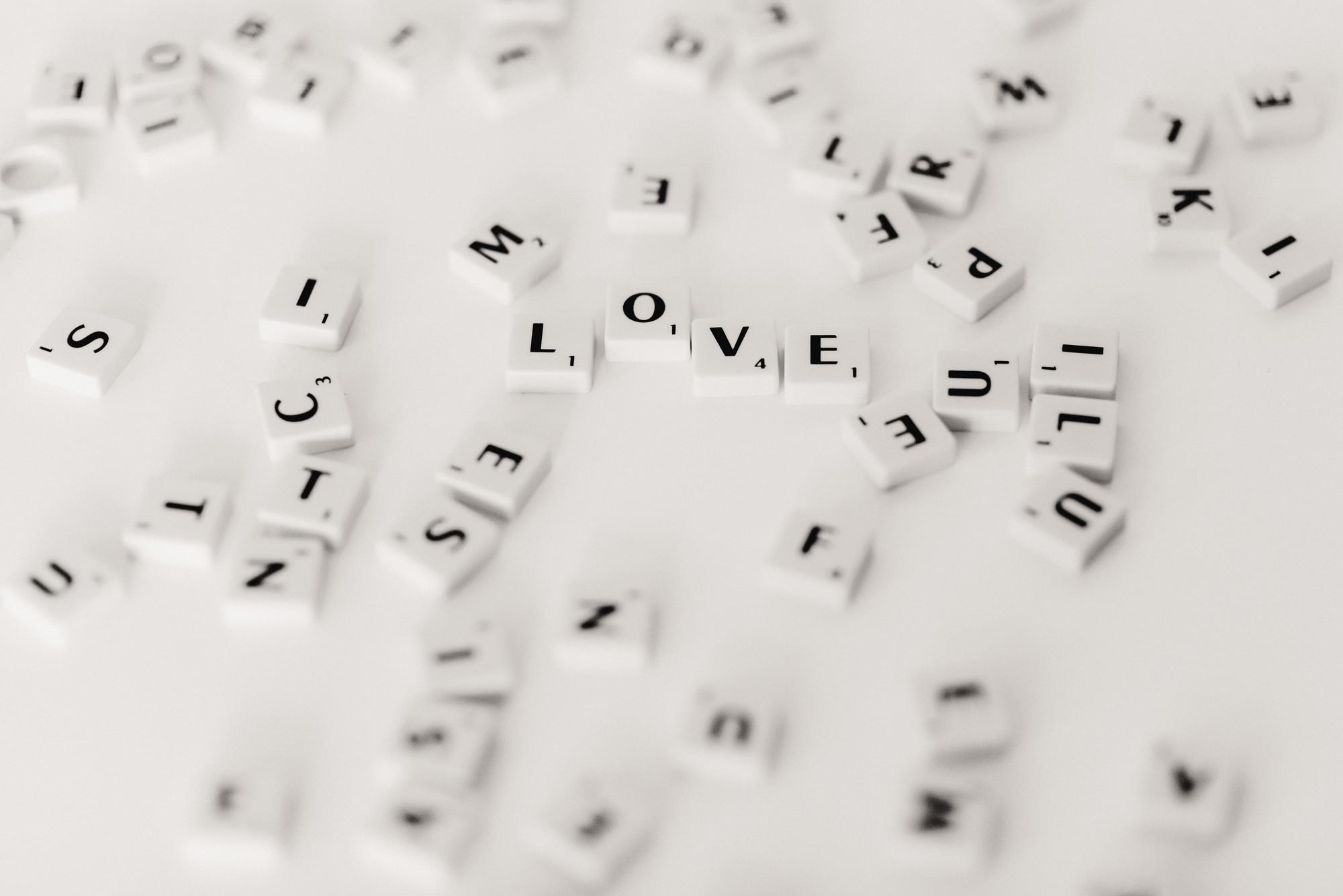

Take a process like word recognition. Your brain has developed its own algorithms, so that when you hear a word like “Emergency”, you pick it up very quickly. But for computer software to recognize words using traditional algorithms takes a lot of math. The software processes the sounds of that word in sequence, hearing that it starts with an ‘E’, then scrolling through different possibilities to find that the second letter is an ‘M’, and so on. It processes in series, whereas AI processing that simulates the human brain does this work in parallel, all at once. When thousands or even millions of bits of information are running through tiny circuits on a microchip, this parallel work puts significant pressure on that chip and quickly drains the battery.
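To make the contrast concrete, here is a toy sketch in Python. It is not GN's algorithm, and real speech recognition works on audio features rather than letters; the vocabulary, the function names and the one-hot encoding are all assumptions made for illustration. The serial version narrows down candidates one letter at a time, while the second version scores every candidate word against the whole input, which is the kind of independent work that parallel hardware could perform at once.

```python
# Hypothetical vocabulary, chosen only for illustration.
VOCABULARY = ["EMERGENCY", "EMERALD", "ENERGY", "ELEPHANT"]


def recognize_serial(heard, vocabulary):
    """Narrow down the candidates one letter at a time."""
    candidates = list(vocabulary)
    steps = 0
    for position, letter in enumerate(heard):
        steps += 1
        candidates = [
            word for word in candidates
            if len(word) > position and word[position] == letter
        ]
        if len(candidates) <= 1:
            break  # nothing left to narrow down
    match = candidates[0] if candidates and candidates[0] == heard else None
    return match, steps


def encode(word, length):
    """One-hot encode a word: 26 slots per letter position (an assumed encoding)."""
    vector = [0] * (length * 26)
    for position, letter in enumerate(word[:length]):
        vector[position * 26 + ord(letter) - ord("A")] = 1
    return vector


def recognize_parallel(heard, vocabulary):
    """Score every word against the whole input; each score is independent."""
    length = max(len(word) for word in vocabulary)
    signal = encode(heard, length)
    scores = [
        sum(a * b for a, b in zip(encode(word, length), signal))
        for word in vocabulary
    ]
    # Count the multiply-accumulate operations this approach requires.
    operations = len(vocabulary) * len(signal)
    best = max(range(len(vocabulary)), key=scores.__getitem__)
    return vocabulary[best], operations


if __name__ == "__main__":
    print(recognize_serial("EMERGENCY", VOCABULARY))
    print(recognize_parallel("EMERGENCY", VOCABULARY))
```

In this sketch the serial version needs only a handful of comparison rounds, while the parallel-style version performs hundreds of small multiplications even for four words. Python runs them one after another here, but dedicated hardware would carry out many of them simultaneously, which hints at why this style of processing demands so much from a tiny chip and its battery.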

The way humans, and the machine learning that imitates them, "think" or process information is fundamentally different to how traditional machines have been programmed to think

And that is exactly the challenge engineers now face in trying to design AI structures on microchips.

“To use machine learning within the hearing device itself means that dedicated hardware is probably the only realistic way forward, because the structures are so different from what we know today. The hardware that runs machine learning is not like the hardware we use today for normal signal processing,” explains Brian Dam Pedersen.

Why machine learning matters for hearing

Today, the machine learning at work in hearing aids doesn’t happen in the hearing aid itself, but rather makes use of the greater processing power in the smartphone that it connects to via an app. It uses input from hearing aid users to make analyses and recommendations for optimal settings in different situations, and refines these over time as more and more user data comes in.
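As a rough illustration of how recommendations might be refined as more user input arrives, the sketch below keeps a running average of the setting users choose in each situation. The article does not describe the actual method used in the app, so the `PreferenceLearner` class, the situation labels and the idea of averaging a single volume-like value are all assumptions.

```python
from collections import defaultdict


class PreferenceLearner:
    """Hypothetical learner: tracks the average setting chosen per situation."""

    def __init__(self):
        self._count = defaultdict(int)
        self._mean = defaultdict(float)

    def record(self, situation, chosen_setting):
        """Fold one user choice into the running average for that situation."""
        self._count[situation] += 1
        n = self._count[situation]
        # Incremental mean: no need to store every past choice.
        self._mean[situation] += (chosen_setting - self._mean[situation]) / n

    def recommend(self, situation, default=0.0):
        """Return the learned average, or a default if nothing has been recorded."""
        return self._mean.get(situation, default)


if __name__ == "__main__":
    learner = PreferenceLearner()
    learner.record("restaurant", 4)
    learner.record("restaurant", 6)
    print(learner.recommend("restaurant"))
    print(learner.recommend("watching TV", default=3.0))
```

The incremental update means the recommendation shifts a little with every new piece of input, without the app having to keep a full history of past choices.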

But running AI on the chip of the hearing aid itself would mean big gains in getting closer to natural hearing.

“The next step is algorithms that learn from the sound environments coming into the hearing aid, in order to automatically adjust the settings to provide the best experience for different situations like a restaurant, cycling out on the street, or watching TV,” Brian Dam Pedersen explains.

The ultimate achievement for hearing aids, which may well entice people with normal hearing, too, is solving the problem of how to focus on the person speaking to you in a noisy, crowded situation. The ability to do this is known as the Cocktail Party Effect, and sorting speech from noise has been the toughest nut to crack.

Running machine learning on hearing aids means the hearing aids would be able to tell what sound or voice you want to listen to in a noisy situation, and focus on making that speech clear, while reducing the amount of distracting background noise. For people with normal hearing, these types of situations in restaurants and at parties can be challenging enough, so imagine how it is for a person with hearing loss – and just think what it could mean if we could overcome it.

Future solutions that are more human and less engineered

While it may seem ironic, what is so exciting about implementing machine learning in hearing aid hardware is its potential to make technology more human. Solutions that mimic how we as humans take in information, process it, act on it, and constantly learn from it mean that in the future, we can expect to see tech that is less top-down and human-engineered, and more bottom-up, thinking for itself.

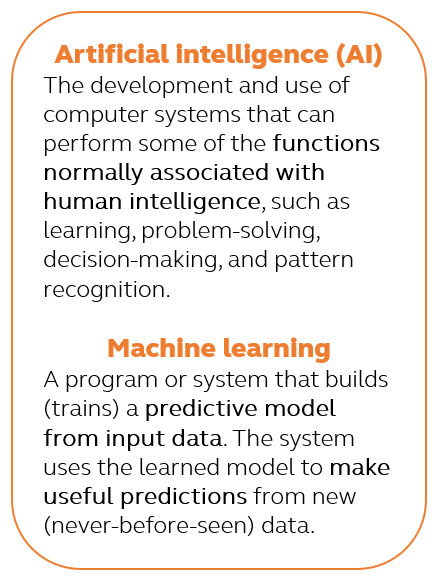

Sources: Artificial intelligence definition, Chambers 21st Century Dictionary

Machine learning definition, Google Developers Machine Learning Glossary

Applications of information theory: Physiology, Encyclopedia Britannica